A thrifty way to collect easily-segmented high-speed video.

The Kazoo Project

Algorithms for multiplayer video-based musical instruments.

This project's goal was to make a super-expressive musical controller that used cheap components and some fancy machine learning algorithms. I wanted a controller that was completely trainable by dancing and listening to music: a computer watches someone dancing, then listens to music, and finally creates an mapping from visual motions to sounds (i.e. unsupervised learning).

Hardware.

I started with the highest bandwidth-per-dollar computer interface device: a webcam.

Specifically the PS3 eye is at an awesome sweet spot, delivering 18MB/sec of video data via USB, at 125 frames per second, with 8-20ms latency and <1ms jitter, and costing only about $30.

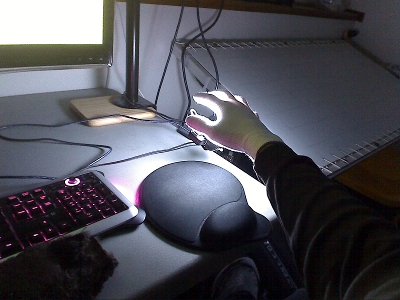

I then added a ~$5 LED light ring and a ~$5 white glove, and had a perfect desk-mounted controller.

The only thing left was software.

A thrifty way to collect easily-segmented high-speed video.

Algortihms.

I used a 5-stage process to transform video to audio.

video -> 1. extract features -> 2. linearize -> 5. optimal

linear

audio <- 5. extract features <- 4. linearize <- matching

The outer stages (1 and 5) are approximately-reversible feature extraction, where optical flow features are added to video, and sound is transformed to a log(frequency) energy spectrum. The next stages (2 and 4) linearize the feature space of the video and audio features, using learning soft vector quantization [yes that is a 4-word phrase]. These are trained with a big ole' EM clustering algorithm that runs on a 512-core GPU (providing a great excuse to learn to program in CUDA C). Training takes a few hours, but only needs to be done once on each data set (not once on each audio,video pair).

The trickiest part was the middle stage witch connects the linearized video signal to the linearized audio signal. I l1-normalized the signals and considered them as 10000-dimensional discrete probability distributions [yes there are 4 zeros]. Assuming the linearization stages have done their job, I used a simple linear transformation here, via a 10000x10000 matrix. To learn a transformation that respected both the distribution and the dynamics of the signals in each space, I set up an objective function that minimized the relative entropy between time-shifted copies of the signals. That led to a 100000000-dimensional optimization problem (thank goodness for GPUs!) and a surprisingly tractable objective function: a couple sparse matrix-matrix multiplies and a single dense matrix-matrix multiply per iteration. I used CUBLAS and CUSPARSE on the GPU and OpenMP and Eigen3 for multicore CPU linear algebra.

Data.

I collected a couple hours of training data-- videos of my hand dancing to Tipper's Relish the Trough.

The larger B&W videos are downsampled from the webcam, and the smaller colored videos include optical flow estimates from a smoothed Cox filter.

I used the smaller videos as feature vectors to train a prototype music controller interface.

The controller didn't work very well, but the videos might make fun VJ material.

Here they are: 5x slowed down, low-res on vimeo, hi-res on links, and freely public domain.

LICENSE: all the gloves videos are public domain.

Results

The final version of the controller was still difficult to use.

I did have some fun "conducting" the Bulgarian Women's Choir, but it seemed like the soft vector quantizer was quantizing too coarsely, even with ~10000 clusters.

On the one hand, local PCA might reduce the data dimension more accurately than soft vector quantization, but on the other hand, it would also make the distribution+dynamics matching problem much more difficult.

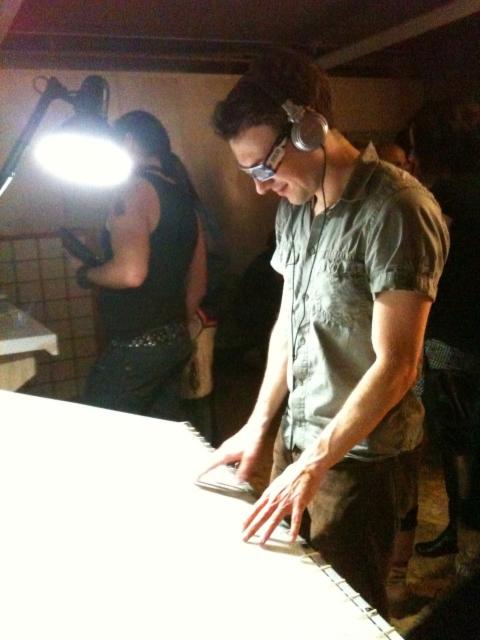

The shadowboard is a large multi-touch surface with low latency tracking, mostly used for controlling synthesized sound. Supported by a sexy anodized aluminum frame, the 24x48 inch translucent surface is sensitive to both shadows and impacts, enabling spatially precise yet low-latency control. The shadowboard is also unusual among multitouch tables in that it can track finger tips in 3D well above the table surface.

The shadowboard debuted at LoveTech's 2 year anniversary.

LoveTech 2Yr Digital Jam Lounge & Interactive Art Zone from Rich DDT on Vimeo.